Figure 9 from Intelligence Beyond the Edge: Inference on Intermittent Embedded Systems | Semantic Scholar

How Acxiom reduced their model inference time from days to hours with Spark on Amazon EMR | AWS for Industries

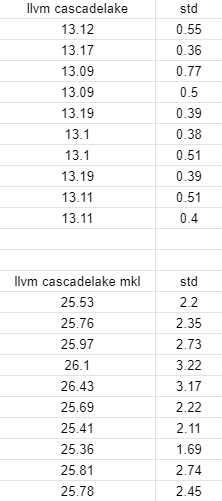

Expected vs. observed inference time for VGG-16 on an Intel Core i7...

System technology/Development of quantization algorithm for accelerating deep learning inference | KIOXIA

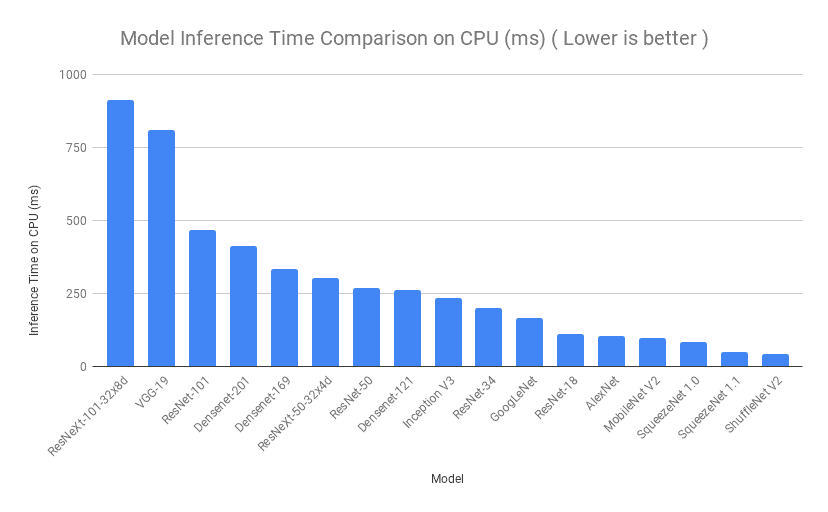

Efficient Inference in Deep Learning — Where is the Problem? | by Amnon Geifman | Towards Data Science

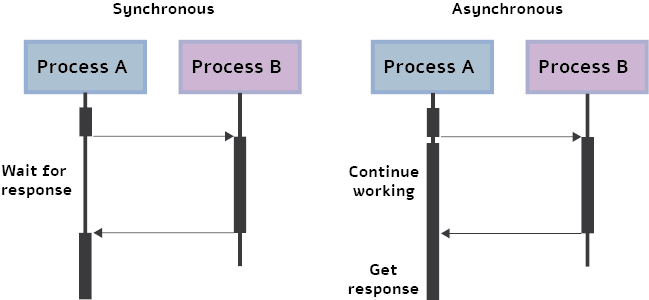

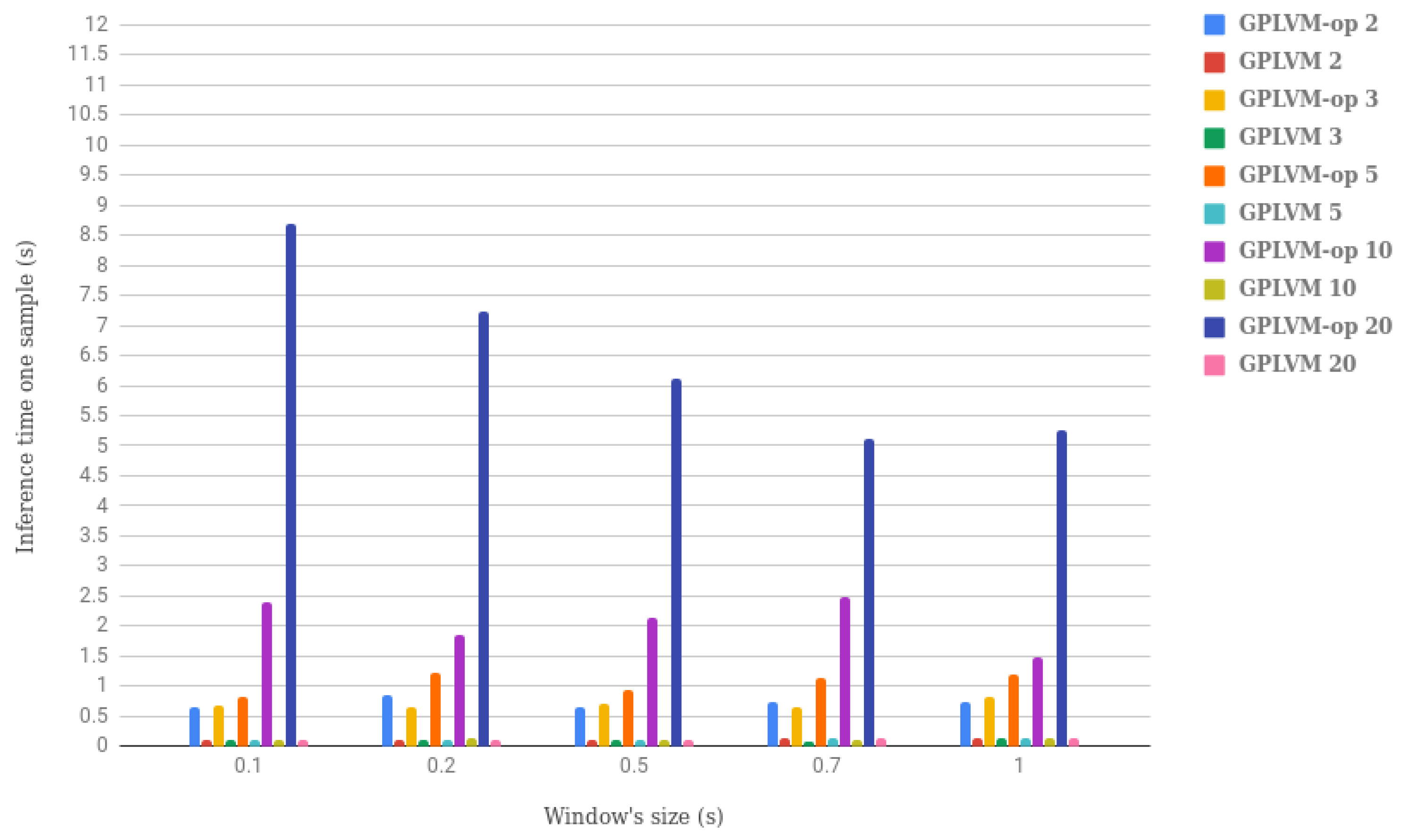

Electronics | Free Full-Text | On Inferring Intentions in Shared Tasks for Industrial Collaborative Robots | HTML

![PP-YOLO Object Detection Algorithm: Why It's Faster than YOLOv4 [2021 UPDATED] - Appsilon | Enterprise R Shiny Dashboards](https://appsilon.com/wp-content/uploads/2020/09/pp-yolo-frameratevsmethod.png)

PP-YOLO Object Detection Algorithm: Why It's Faster than YOLOv4 [2021 UPDATED] - Appsilon | Enterprise R Shiny Dashboards

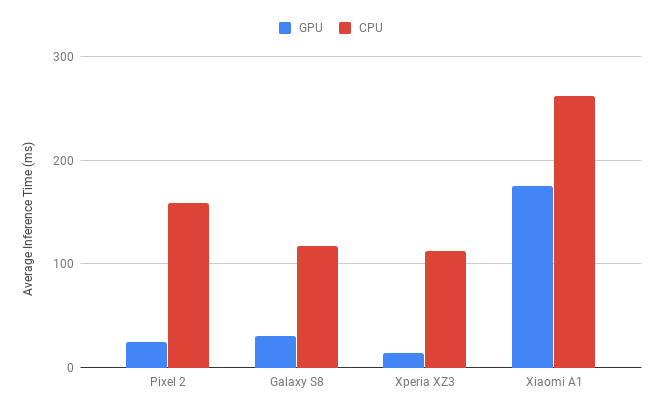

Efficient Inference in Deep Learning — Where is the Problem? | by Amnon Geifman | Towards Data Science

![[PDF] 26ms Inference Time for ResNet-50: Towards Real-Time Execution of all DNNs on Smartphone | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/2824181f87c6d047b81801faf1652310b98b1cad/3-Figure2-1.png)

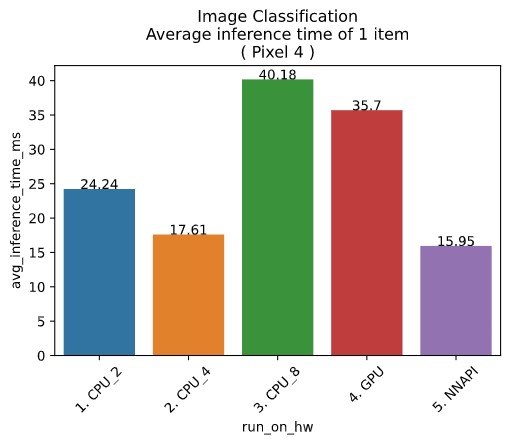

[PDF] 26ms Inference Time for ResNet-50: Towards Real-Time Execution of all DNNs on Smartphone | Semantic Scholar

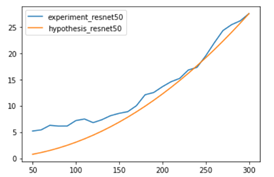

Difference in inference time between resnet50 from github and torchvision code - vision - PyTorch Forums

The Correct Way to Measure Inference Time of Deep Neural Networks | by Amnon Geifman | Towards Data Science